When the spectrum of plausible outcomes includes an appreciable portion of white-collar tasks being automated, or a tenth of all electricity in America being used for AI training and deployment, good forecasts matter.

Sam Altman of OpenAI and Dario Amodei, CEO of Anthropic, tantalise customers with the idea that ‘Artificial General Intelligence’ will arrive in 2026, whilst vendors and enterprises of all kinds send marketing messages about how extensively they are deploying Generative AI.

The fact that they label any AI tool deployment as if it were Generative AI is a sign of not wanting to be seen to be left behind by more innovative competitors. Yet the truth is that real Generative AI deployments are only seen automating boring, well-understood, highly predictable, repetitive, high-volume processes, where the costs of inaccurate decisions are neither business-threatening nor life-threatening. The well-documented inbuilt fallibilities of LLMs mean that outputs must be protected by rigorous guardrails and constant oversight to prevent security breaches, compliance failures and poor service.

‘Whatever the LLM executives say, AI is changing business much more slowly than expected. A high-quality survey from America’s Census Bureau finds that a mere 10% of firms are using it in a meaningful way. “Enterprise adoption has disappointed,” notes a recent paper by UBS, a bank’.

Source: The Economist ‘Why is AI so slow to spread? Economics can explain’ July 17, 2025

10% is not insubstantial and reflects the real lead times that scaling and operationalising new technologies take. The corollary, though, is that the large language model (LLM) vendors may find themselves trapped: failing to secure real recurring revenues while their costs and funding needs continue to accelerate. A market correction and investor scrutiny may leave them too exposed, leaving the future to competitors with viable funding that solve real problems and work in specialist areas, e.g. the legal, insurance, healthcare and defence fields, with outputs based on specialist language models in private cloud ecosystems.

That does not mean that AI will not advance, nor that its impacts on humanity, business and governments will not be considerable; just that it will take longer.

That is why the LEAP project caught my attention.

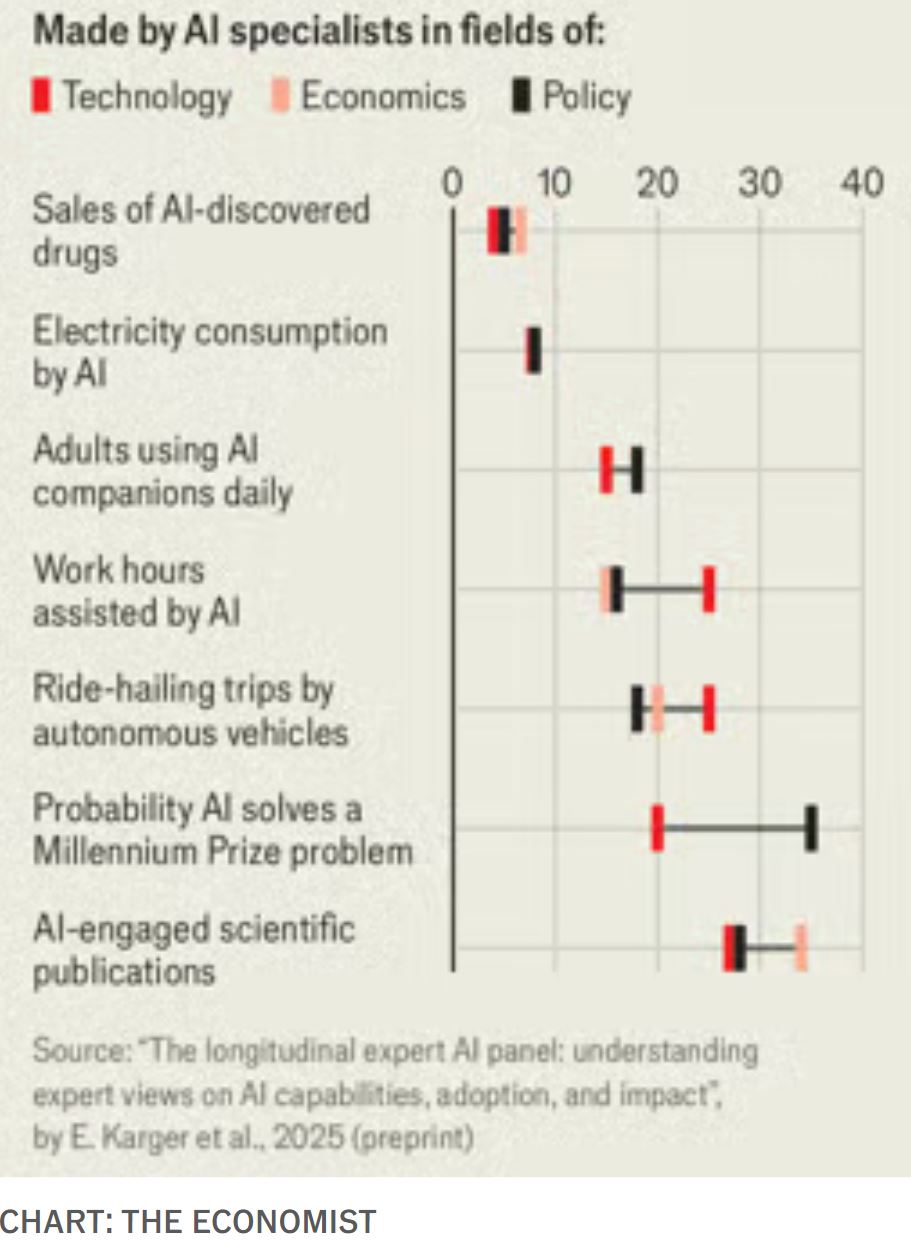

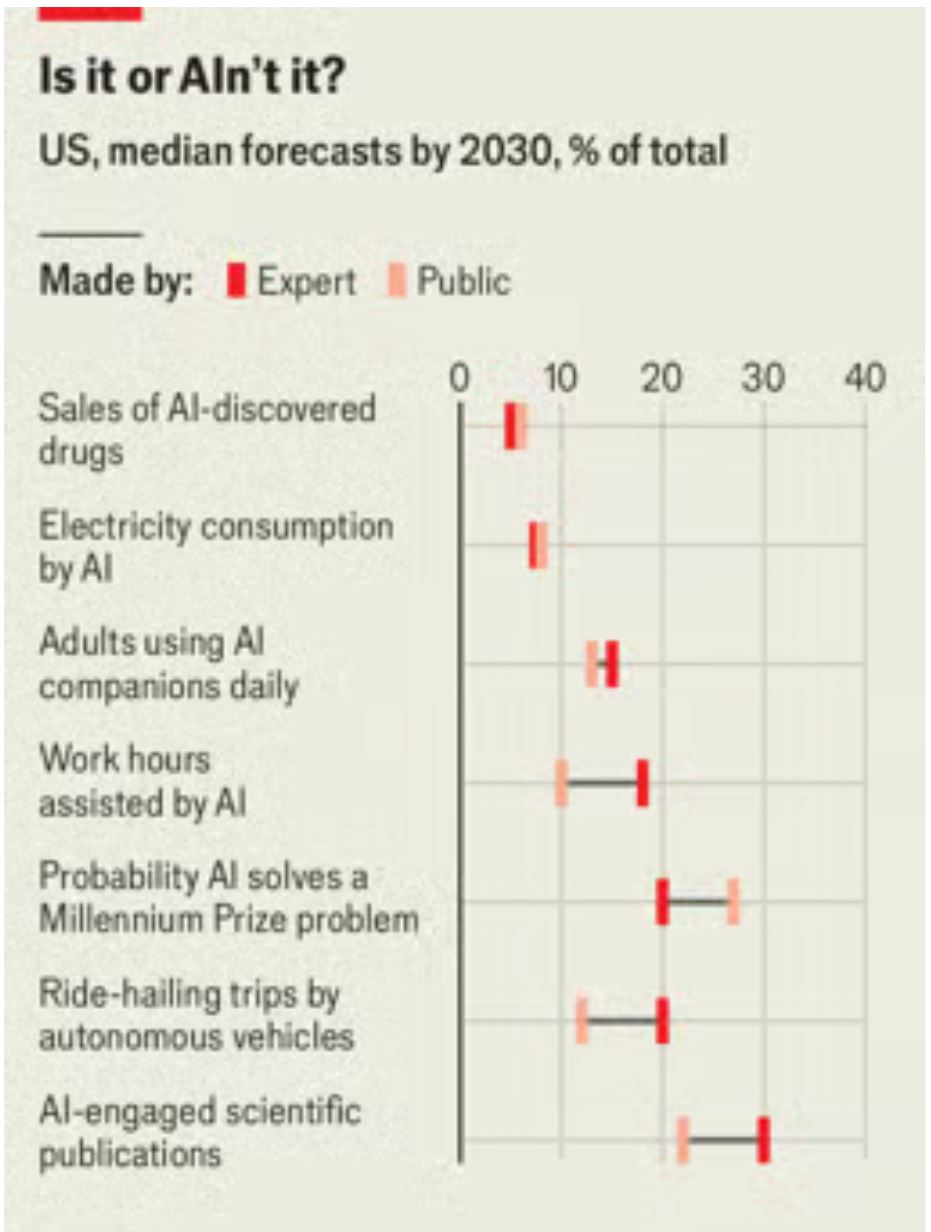

The Longitudinal Expert AI Panel (LEAP) sets out to do three things. First, rather than assessing vague claims about concepts like AGI, it offers specific, testable hypotheses. When will self-driving cars account for 20% of American ride-hailing trips? What proportion of the country’s electricity will be used for AI by 2040? What will be the benchmark scores for open-source and proprietary AI models in 2025, 2027 and 2030?

Ezra Karger, an economist at the Federal Reserve Bank of Chicago, runs the LEAP project and will combine analysis and predictions from 350 experts from many different fields:

- Corporate AI analysts

- Academic computer scientists

- Economists

- ‘Superforecasters’ with a track record of being more accurate than experts

The results of the first round, published on November 10th, suggest AI’s impacts are just beginning to be felt. The median forecast has more than 18% of American work hours being AI-assisted by 2030, up from 2% in September this year. The forecasters expect that AI will account for about 7% of American electricity usage by the same year.

How about the longer term, to 2040?

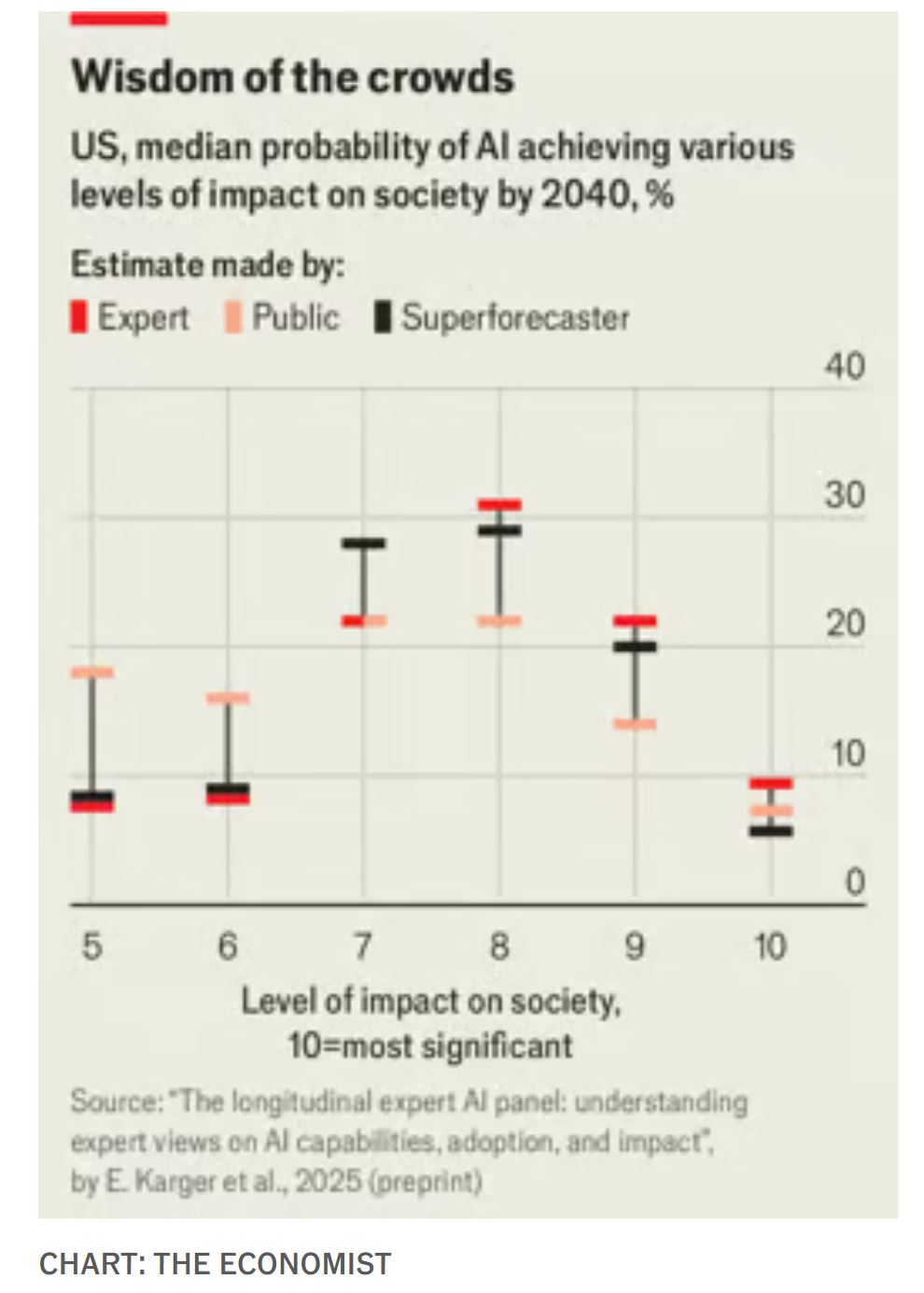

By 2040, they expect AI to be as important to this century as electricity or the car were to the previous one—a score of eight on a ten-point scale devised by Nate Silver, a statistician, designed to measure the impact of different inventions. They also thought there was a nearly one-in-three chance that AI might rank at least as high as level nine, where it would join technologies like the printing press as a technology that “changed the course of human history”.

That is far less than the marketing messages of the LLM vendors and the Big Tech vendors suggest, but still impressive. It does imply that, given the obsolescence built into current LLM technologies and the longer time scales, many of the AI firms that dominate in 2040 will be different from those at the forefront today.

The leaders of the big three artificial-intelligence (AI) labs promise great things, and soon. Sam Altman, the boss of OpenAI, thinks next year computers will be capable of “novel insights”. Dario Amodei, who runs Anthropic, says “powerful AI” (what others call AGI—artificial general intelligence) could arrive in the same timeframe. Demis Hassabis of DeepMind has suggested that “within the next decade or so”, AI could cure all diseases.

Some of the grandest claims may be made with at least one eye on marketing. Still, getting a true sense of the probable speed of AI development is important, says Ezra Karger.

The LEAP forecasters doubted that AI would meet the loftiest expectations of its boosters—or at least, not as quickly as they claim. The average expert thought there was only a one in five chance of developing Mr Amodei’s “powerful AI” by 2030.

CHART: The Economist

On the other hand, rapid progress in the field has caught out even the experts before. When the fieldwork for the current report was done in April, the top score for an AI system on a tricky maths challenge called Frontier Math was 19%. The median expert guess for where it would be by the end of 2025 was 31%. In a parallel study asking the general public the same questions, the median guess was 27%. But in August Google announced a score of 29%, beating many forecasts—and with four months left in which to get better still.

Dr Karger will ensure the LEAP project constantly measures actual outcomes against forecasts to identify the consistently best forecasters and refine the predictive modelling. That is easier on shorter time scales, e.g. taking 2030 as the year of delivery.
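To make the idea of scoring forecasts against outcomes concrete, here is a minimal sketch in Python. It is not LEAP's actual method, which the report summary here does not describe: the choice of mean absolute error and the names `mean_absolute_error`, `rank_forecasters` and `frontier_math_2025` are my own assumptions for illustration. The only figures used are the Frontier Math numbers quoted above, and the 29% is the interim August score rather than the final end-of-2025 outcome.

```python
from statistics import mean


def mean_absolute_error(forecasts, outcomes):
    """Average absolute gap between forecasts and outcomes on shared questions."""
    shared = [q for q in forecasts if q in outcomes]
    if not shared:
        raise ValueError("no resolved questions in common")
    return mean(abs(forecasts[q] - outcomes[q]) for q in shared)


def rank_forecasters(panel, outcomes):
    """Return (name, error) pairs sorted from most to least accurate."""
    scores = {name: mean_absolute_error(f, outcomes) for name, f in panel.items()}
    return sorted(scores.items(), key=lambda item: item[1])


# Median guesses quoted in the article (percentage scores).
# The question key is a hypothetical label, not a LEAP identifier.
panel = {
    "expert_median": {"frontier_math_2025": 31.0},
    "public_median": {"frontier_math_2025": 27.0},
}

# Interim figure only: the 29% score reported in August.
interim_outcomes = {"frontier_math_2025": 29.0}

ranking = rank_forecasters(panel, interim_outcomes)
for name, error in ranking:
    print(f"{name}: off by {error:.1f} percentage points")
```

On the interim figure both medians are equally far from the reported score, which shows why a single question settles little: ranking forecasters reliably needs many resolved questions over repeated rounds.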

I would far rather base my business and operational AI planning on these models from LEAP than on the siren claims of the LLM vendors and the many service providers pretending, for example, that agentic AI is already deployable, scalable and reliable.

How about you?
