Before I answer that, BCG has just published a report, “Insurance Leads in AI Adoption. Now It’s Time to Scale”, that segues neatly into ideal use cases for optimisation (see Further Reading for a link to download the report). It describes two opposing forces at work:

- Insurance is one of the leading industries embracing AI

- It has not overcome the challenges of operationalising and scaling AI

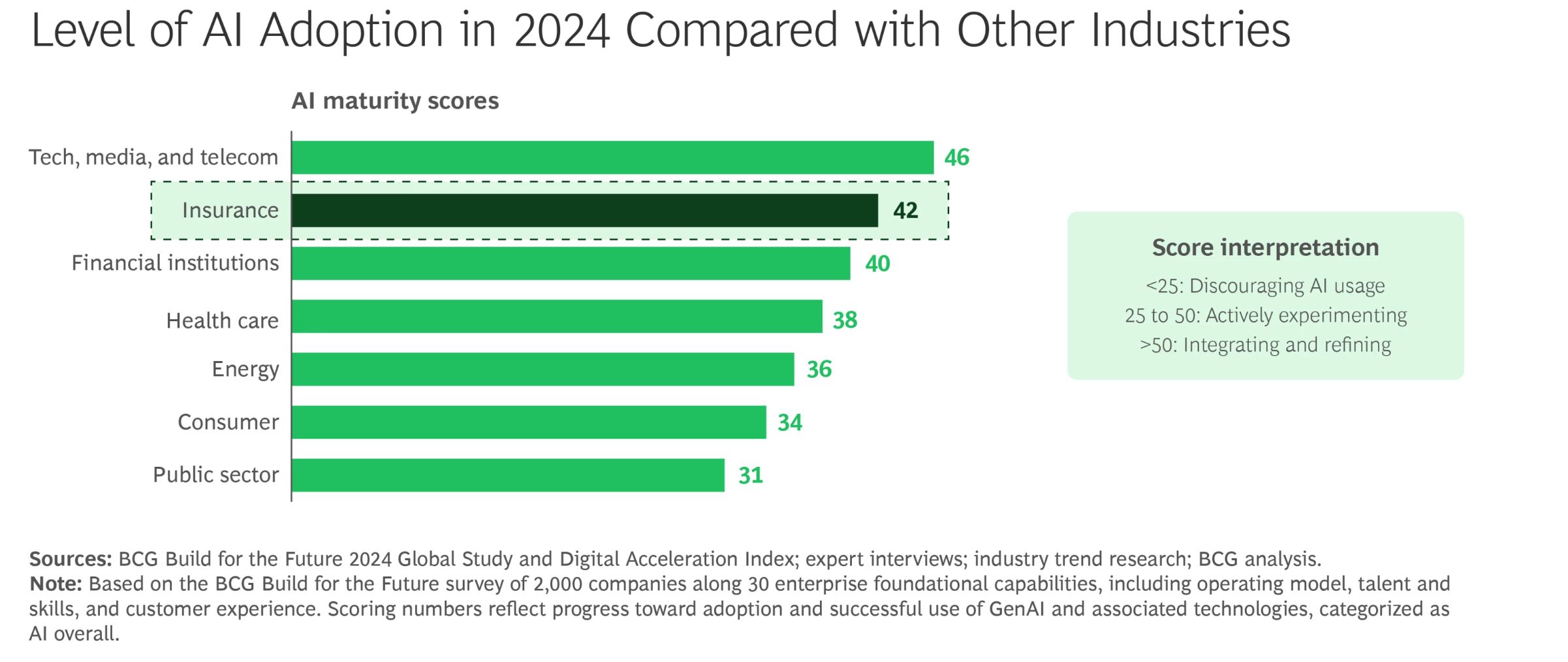

Insurance, AI and the level of AI adoption

One caveat with such studies is that AI covers a very wide spectrum: not just LLMs, Generative AI and Agentic AI, but also a wide range of proven and mature tools.

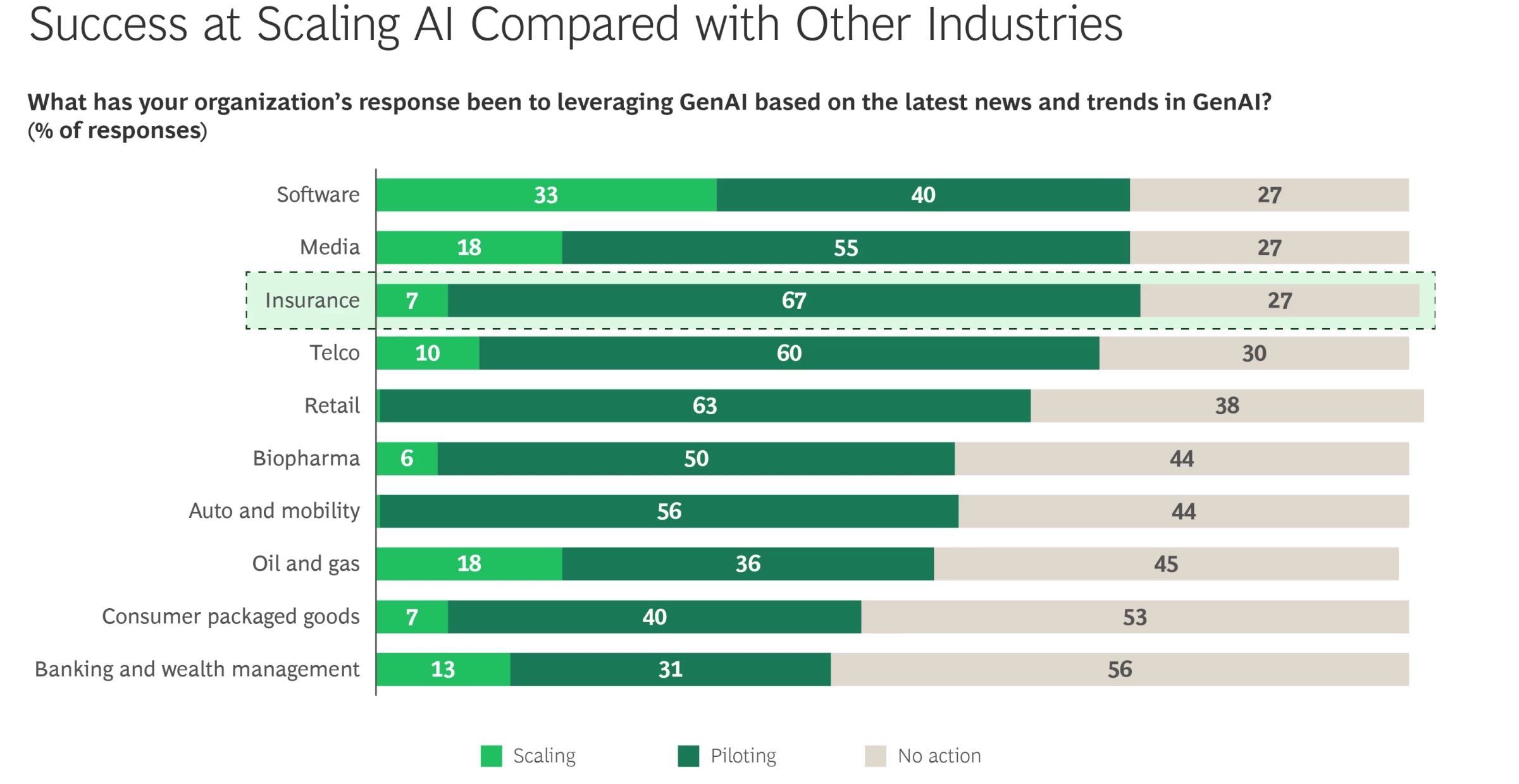

Success at scaling AI

In this BCG chart, insurance is no more successful than other industries at scaling AI.

Over two-thirds of projects are mere pilots, with only 7% involving scaling. That is not really a surprise, as the inherent flaws in LLMs and Generative AI products create significant technology challenges. And enterprise technology staff usually prefer to experiment with new tools and widgets rather than focus on important business issues. After all, the day-to-day burden of maintaining legacy technology needs lightening with some fun, but this leads to what McKinsey describes as ‘Pilot Purgatory’: 80% to 90% of such projects fail to advance beyond the pilot because the pilot was badly planned, with no compelling problem in mind.

This is confirmed by an innovator, Maarten Ectors, who was once the Chief Innovation Officer at Legal & General Group (Health, Life, Pensions and Investment). Prior to that he was Chief Digital Officer at L&G’s P&C subsidiary, where I was a technology partner and first met Maarten. He is the founder of a number of companies, including Greentic.ai, an ‘open source platform to create armies of digital workers’. So he has skin in the LLM, SLM, GenAI and Agentic AI field, and if the reality matched Sam Altman’s hype you would expect Ectors to describe exciting use cases. Instead, he helps large enterprises ‘discover and launch the best AI use cases’, which turn out to be mundane.

His experience is that today those use cases should be focussed on boring tasks. He has helpfully described his frontline experiences and advice and given me permission to publish these below.

Where to best apply AI in your enterprise

by Maarten Ectors

It looks like a trivial question, but there are so many different use cases, tools and solutions that it is not surprising many companies are struggling with where best to apply AI. Maarten has amassed a clear set of dos and don’ts.

Don’t focus on what humans don’t know how to do

Too many companies focus their AI efforts on trying to solve the really challenging business problems for which their experts don’t have a solution. These types of AI projects tend to fail because AI is like a teenager: you need to be able to tell it what to do and validate that it is doing it correctly. If you don’t know how to do it, don’t expect some magical AI to do it for you.

Don’t focus on the technology, focus on the boring business challenges with high ROI

There is a lot of AI technology that is really cool, and lots of technical teams will be really excited to experiment with it. Non-technical teams like the no-code drag-and-drop solutions. Either way, you are unlikely to have a big impact. You need to start with the boring business challenges. What is it that most employees do, or customers ask for, on a daily basis and find rather boring and time-consuming? These activities are very well understood and, if they can be automated, will have a high return on investment [ROI]. Any resistance to change is probably minimal if you can actually give employees more time to do more interesting work.

Don’t introduce new tools if you can avoid them

Any new tool that needs to be deployed in an organisation often comes with high costs related to training and change management. Can you enable AI in an existing tool, or use an existing platform (e.g. Microsoft Messaging or Slack) and talk to a new assistant there?

Don’t automate the past, rethink the future

You can use AI to scan a handwritten document and try to read it so it can be automatically processed. You can have a call centre agent using an assistant to support customers better. Both could have a reasonable business case but nothing compared to doing away with handwritten documents and letting customers directly talk to smart assistants without having to wait in a call centre queue. Rethink the future when you are applying AI, don’t automate the past. You can start with an assistant for call centre agents but only if the next step is to connect it directly to customers.

Don’t try to automate 100%, focus on human-assisted AI

Many companies have processes with lots of edge cases. Human experts are great at resolving edge cases. Let the AI focus on the 80% to 95%, not on the remaining 5% to 20%. AI can potentially automate 50% to 80% straight away. However, AI can make dumb mistakes, so start with human-assisted AI. The AI looks at a challenge or work order and proposes a strategy. A human expert then applies a traffic light system: green, go ahead; red, stop, let me take over; orange, adapt and proceed. The goal is to get as much green and red as possible. Red is OK at this stage. It means that these use cases should still go to humans, or that a more advanced solution can be implemented in the future. Green can be fully automated once the process has come back green consistently over enough cases. Orange is where the focus should be. You want to make the orange category smaller each day. Orange is what holds you back from fully automating green, or from clearly deciding what will go to human experts or needs a different approach.
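To make the traffic light loop more concrete, here is a minimal Python sketch of how a team might log the human expert’s verdicts for each use case. It is an illustration under stated assumptions, not Maarten’s own method: the class and method names (`TrafficLightTracker`, `record`, `ready_to_automate`, `orange_share`) are hypothetical, and the rule that a use case qualifies for full automation after a fixed number of consecutive green verdicts (`promote_after`) is an assumed reading of “enough times”.

```python
from collections import defaultdict
from enum import Enum


class Light(Enum):
    """The human expert's verdict on an AI-proposed strategy."""
    GREEN = "go ahead"
    ORANGE = "adapt and proceed"
    RED = "stop, let me take over"


class TrafficLightTracker:
    """Hypothetical per-use-case log of human-assisted AI verdicts."""

    def __init__(self, promote_after: int = 50):
        # Assumed threshold: consecutive GREEN verdicts needed before a
        # use case is flagged as a candidate for full automation.
        self.promote_after = promote_after
        self.green_streak = defaultdict(int)
        self.counts = defaultdict(lambda: {light: 0 for light in Light})

    def record(self, use_case: str, verdict: Light) -> None:
        self.counts[use_case][verdict] += 1
        if verdict is Light.GREEN:
            self.green_streak[use_case] += 1
        else:
            # Any ORANGE or RED resets the streak: the process was not "always green".
            self.green_streak[use_case] = 0

    def ready_to_automate(self, use_case: str) -> bool:
        return self.green_streak[use_case] >= self.promote_after

    def orange_share(self, use_case: str) -> float:
        # Fraction of verdicts that needed adapting: the number to shrink each day.
        total = sum(self.counts[use_case].values())
        return self.counts[use_case][Light.ORANGE] / total if total else 0.0


if __name__ == "__main__":
    tracker = TrafficLightTracker(promote_after=3)
    for verdict in [Light.ORANGE, Light.GREEN, Light.GREEN, Light.GREEN]:
        tracker.record("address-change", verdict)
    print(tracker.ready_to_automate("address-change"))  # True
    print(tracker.orange_share("address-change"))  # 0.25
```

Tracking the orange share per use case gives each team a simple number to push down over time, while the streak rule keeps a single orange or red verdict from letting a use case slip into full automation too early.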

Decentralise AI

The worst strategy is to have a centralised AI team with a long queue of people wanting either to AI-enable their work or to make small changes that improve the AI process [e.g. moving from orange to green]. You want to decentralise AI and enable different teams to improve and automate the company’s business processes and boring tasks in parallel. Think about it this way. If your customers have access to an AI assistant, what would they prefer? Waiting until the AI team has time to focus on sales and services, after all support processes have been automated? Or allowing sales, services and support to work in parallel on automating 80% of their repetitive customer requests? You can lose a lot of time if you create a centralised queue where domain experts need to explain to technical AI teams what they want. Try to focus on solutions where domain experts can configure the rules and processes, while AI experts focus more on system integrations, fine-tuning models and the like. As soon as AI can call a system, the domain expert can go from using this integration for basic tasks to more advanced and complex tasks. If they need to submit a change request and wait three months, then your customers will be waiting as well, while your competitors are not.

Prototype and productise in different ways

It is OK for a team to use an online AI drag-and-drop product or some AI-coded Python to create a quick-and-dirty prototype. The first step is to validate how to solve a problem. Once you know how to solve it, you can invest time in the other aspects, such as cost, performance, privacy and compliance. The AI production system might be different from the quick-and-dirty prototype, but focusing prematurely on cost, performance, privacy or compliance can leave you with AI projects that are too slow and too costly and still don’t solve the problem correctly. First learn, then optimise.

Not using AI is not an option. Speed is critical

Not doing anything because AI will change in the future sounds like an attractive option for some. The issue is that if a competitor manages to learn to use AI to make their employees 10x, 100x or even more productive, then this might mean they take over the market. AI can change the business rules and you don’t want to be on the wrong side of such an industry change. If you are a taxi company, then a robotaxi company can make your industry irrelevant. Waiting is the opposite of what you need to do. You should use the time to do experiments on how future AI-enhanced products can make your company grow, even if yesteryear’s products get disrupted by AI.

Need more help?

AI can be challenging, and your problems might be different from those discussed here. In that case, why don’t we talk?

Maarten Ectors’ advice is practical and certainly not a theoretical construct. It matches feedback I have had from various sources, and the BCG report, with the added advantage that he is helping enterprises to scale AI today and in the longer term, both tactically and strategically.

Large Language Models or Small Language Models?

LLMs and SLMs both have a part to play. There is a line of thought that LLM innovative advances have slowed and businesses are focussing more on SLMs.

‘Companies have moved on from a spend-whatever-it-takes approach, employed in the early days of generative AI, to a greater focus on return on investment. Though they may still use LLMs for many tasks, they can save money by doing discrete, repeatable jobs with SLMs. As one venture-capital grandee puts it, you may need a Boeing 777 to fly from San Francisco to Beijing, but not from San Francisco to Los Angeles. “Taking the heaviest-duty models and applying them to all problems doesn’t make sense,” he says.’

The Economist ‘Faith in God-like LLMs is waning’ September 8th 2025

A POC, MVP checklist

This comes from a colleague and friend, Chris Surdak, who has operationalised and scaled AI in settings ranging from NASA space programs to financial services. His advice?

1. Executive adoption decisions based on something other than FOMO

2. Compelling use cases – will tackle real problems and/or leverage significant opportunities

3. Honest and complete business case ROI

4. Systems engineering thinking

5. Effective test campaigns

6. User buy-in to the vision and the experiments

7. Understanding of the technology's true capabilities & the technology's true limitations

9. A backup plan if the experiment fails

10. Self-reflection over prior failures; what has changed in our approach this time?

11. Have the technology partners you chose ‘eaten their dog food’ and proved they can deliver the outcomes you desire using the tools they promote?

Further Reading

Insurance Leads in AI Adoption. Now It’s Time to Scale (BCG)

Can GenAI fix insurance claims headaches? (Insurtech World)

Johnson & Johnson pivots its AI strategy (The Wall Street Journal): https://www.wsj.com/articles/johnson-johnson-pivots-its-ai-strategy-a9d0631f

Faith in God-like large language models is waning (The Economist)

The insurance industry has outpaced other sectors in its early embrace of artificial intelligence. However, few insurers have delivered value at scale. Many AI programs, whether predictive or generative, have stalled because of organizational and individual resistance. The specific issues include limited business engagement, unclear roles and responsibilities, inconsistent support, and the probabilistic nature of AI, which clashes with the inherent culture of the insurance industry.
